Engineering teams practicing DevOps strive to improve the way they build, ship and operate their software while avoiding customer-impacting outages. Parts of this problem can be solved through automation that reviews and monitors your codebase before and after release, taking action before a human operator even investigates an event. One of the hardest parts of eliminating outages completely is that software still involves humans, and human involvement always brings its own set of challenges.

I’ve flown Helicopters for the past 6 years. One thing I've learnt: Aviation has many similarities to Software Engineering Operations (DevOps) in the way the industry strives for risk minimization and incident avoidance.

Many engineers are familiar with the concept of writing post-mortems after major outages or operational events. These reports ensure a team takes the time to replay an event in minute-by-minute detail, deeply understand its causes, and take steps to ensure similar events never happen again.

Similarly in Aviation there’s a saying:

“Regulations are written in blood”

The Aviation industry places a large importance on reviewing incidents. This enables the industry to adapt operating procedures and legislation to minimize the chance of the same thing happening again.

The same can be said for engineering team oncall runbooks and operating procedures. These documents are extended and refined to cover gaps in team processes that events highlight.

A valuable section to include in postmortems is a detailed review of the actions taken leading up to, during and after an event. In hindsight, the actions humans take in these critical moments are often themselves opportunities to reduce the customer impact of an event.

In Aviation, the human influences at play during critical situations are called Human Factors. The Federal Aviation Administration (FAA) studies Human Factors and helps aviators and maintainers manage them through training and regulation, so that pilots and crew avoid situations where humans mess things up.

“Human factors directly cause or contribute to many aviation accidents. It is universally agreed that 80 percent of maintenance errors involve human factors. If they are not detected, they can cause events, worker injuries, wasted time, and even accidents”

FAA Aviation Maintenance Training Book

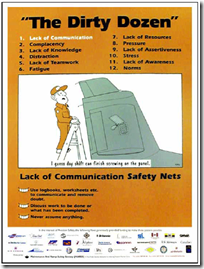

The Dirty Dozen

As part of the FAA’s mission to improve aviation safety, it studies the primary causes of Human Errors in aircraft, sharing these studies so the industry can continually improve safety. For aircraft maintenance specifically, it has collected common categories of Human Errors into a list called the “Dirty Dozen”.

As an engineering leader I couldn’t help but notice the fact that every one of the Dirty Dozen had a parallel in team operational culture.

1. Lack of Communication

“Failure to transmit, receive, or provide enough information to complete a task. Never assume anything.

Studies show that only 30% of verbal communication is received and understood by either side in a conversation. Others usually remember the first and last part of what you say. Improve your communication.”

In simple terms, “see something, say something”. If you notice a change in a system, let your team know. If you see a colleague doing something risky, say something.

Even more importantly, ensure oncall operators and support staff have situational awareness of changes to the systems they support. Consider a situation where a breaking change is deployed and a team’s oncall isn’t fully aware of the change, the parts of the team’s systems it touches, or its potential impact. When this release causes an increase in exceptions across your system, the oncall engineer wastes precious minutes log diving instead of rolling back the change. Ensuring those who operate a service (oncall) are aware of all code and configuration changes is critical.

Effective engineering teams possess the ability to clearly communicate current status, actions team members are planning to take, or changes to systems they’ve noticed. Teams with good communication culture and the right tools can avoid these gaps in context that can lead to errors or extend outages.
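One way to close that gap is to put recent changes in front of the oncall engineer the moment an alarm fires. The following is a minimal sketch of the idea, not any particular tool's API: the `Change` record, the `recent_changes` function and the two-hour window are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Change:
    """A deploy or configuration change (hypothetical record shape)."""
    service: str
    description: str
    deployed_at: datetime


def recent_changes(changes, alarm_time, window=timedelta(hours=2)):
    """Return changes deployed within `window` before the alarm, newest first."""
    candidates = [
        c for c in changes
        if alarm_time - window <= c.deployed_at <= alarm_time
    ]
    return sorted(candidates, key=lambda c: c.deployed_at, reverse=True)


# Illustrative data only.
changes = [
    Change("checkout", "upgrade payment client", datetime(2024, 1, 10, 9, 0)),
    Change("checkout", "new retry config", datetime(2024, 1, 10, 13, 30)),
    Change("search", "index schema change", datetime(2024, 1, 10, 14, 10)),
]
alarm = datetime(2024, 1, 10, 14, 30)

# Newest change first: the most likely rollback candidate leads the list.
for change in recent_changes(changes, alarm):
    print(f"{change.deployed_at:%H:%M} {change.service}: {change.description}")
```

Attaching a list like this to the alarm itself means the operator starts with rollback candidates rather than with raw logs.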

2. Complacency

“Overconfidence from repeated experience performing a task. Avoid the tendency to see what you expect to see—

- Expect to find errors.

- Don’t sign it if you didn’t do it.

- Use checklists.

- Learn from the mistakes of others.”

Consider a mature system that your team knows well, that has no known issues, and that is mostly left alone. Quietly, log files have been filling the disks of the hosts it runs on. How are you reviewing the system’s health? What mechanisms does your team have to review key performance and system metrics on a regular basis to ensure nothing changes due to outside inputs?

Many customer impacting events can be avoided through regular proactive review of operational metrics for all a team’s systems, along with mechanisms for collecting and investigating action items to address any anomalies.
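For the disk-filling example, even a very small scheduled check would surface the problem long before it becomes an outage. Here is a rough sketch using Python's standard `shutil.disk_usage`; the function names and the 80% threshold are assumptions for illustration, and a real team would more likely rely on its existing monitoring stack.

```python
import shutil


def usage_percent(total_bytes, used_bytes):
    """Percentage of the disk in use; 0.0 if the filesystem reports no capacity."""
    if total_bytes == 0:
        return 0.0
    return 100.0 * used_bytes / total_bytes


def check_disk(path="/", warn_at=80.0):
    """Return a warning string if `path` is at or above `warn_at` percent full.

    The 80% default is an arbitrary illustrative threshold.
    """
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    percent = usage_percent(usage.total, usage.used)
    if percent >= warn_at:
        return f"WARN {path} is {percent:.1f}% full"
    return None


if __name__ == "__main__":
    message = check_disk("/")
    print(message or "disk usage OK")
```

Run from a scheduler and reviewed regularly, a check like this turns a silent trend into an action item.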

3. Lack of knowledge

“Shortage of the training, information, and/or ability to successfully perform. Don’t guess, know—

- Use current manuals.

- Ask when you don’t know.

- Participate in training”

When you hop on a plane, you expect your pilot and crew to be well trained on the safe operation of the aircraft. Being responsible for operating a team’s systems in production should be no different.

While a lot of team knowledge can be learned on the job, oncall operators (just like pilots) need proper training if they’re expected to act if systems are at risk of failure during an event.

To ensure those who go oncall are prepared, ensure your team has processes to ramp up your oncall and support staff. Author runbooks containing common failure cases for your team’s systems, and ensure operators review them before going oncall. Keep these up to date as your systems change.

Create training processes to give oncall operators the ability to “dry run” or shadow others before going oncall themselves so they can learn not just common failure modes of your systems, but the tools and practices your team uses to investigate them. Above all, ensure no one goes oncall before they’re ready and are confident they can ensure customers are in safe hands while they “have the conn”.

4. Distractions

“Anything that draws your attention away from the task at hand.

Distractions are the #1 cause of forgetting things, including what has or has not been done in a maintenance task. Get back in the groove after a distraction—

- Use checklists.

- Go back 3 steps when restarting the work.”

Aviation mechanics and pilots alike are taught that during critical situations, they need an environment that allows them to focus. The FAA even has a regulation called the “Sterile Cockpit” rule, created after an incident where an aircraft crashed primarily because the pilots were talking about “politics and used cars”.

The same applies to releasing and operating software. Ensure those who are oncall are able to focus on operations and aren’t distracted by requests to build features or investigate non-operational issues, which reduce their focus on the state of their systems when they need to act.

Just as important is the environment you and your team create during an event. An engineer’s first job during an outage should be to address any customer impact. This is harder to focus on if your team and stakeholders immediately gather round, or reach out asking for updates. Escalating to a secondary team member or manager to help with these tasks ensures an operator can focus on addressing the issue, greatly improving their chances of reducing the time-to-resolve an event.

5. Lack of teamwork

“Lack of Teamwork.

Failure to work together to complete a shared goal. Build solid teamwork

- Discuss how a task should be done.

- Make sure everyone understands and agrees.

- Trust your teammates.”

Many in Aviation have egos (definitely not an issue in engineering, right?); however, egos are put to the side when it comes to safety. Engineering teams should be no different. Safe operation of a team’s systems relies on strong judgement and a trust in each other that decisions are being made because of strong judgement, not ego or fear of retribution.

6. Fatigue

“Fatigue Physical or mental exhaustion threatening work performance. Eliminate fatigue-related performance issues

- Watch for symptoms of fatigue in yourself and others.

- Have others check your work.”

Going oncall can be tiring. Team members may need to work strange hours of the day, and when combined with the stress and adrenaline during busy periods, it’s only natural that they become tired. There’s nothing worse than a tired engineer making a simple mistake while attempting to fix a production issue, making things worse, simply because they’re overtired. Teams and their managers need to be aware of the health and state of those currently oncall to ensure that they’re able to act effectively when needed. Reducing shift length, or swapping out those who are overtired during a busy operational period, is an easy way to reduce this risk. Managers should positively reinforce this behavior to ensure no one puts a concern about being seen as “weak” ahead of ensuring customers’ needs are seen to during an outage.

7. Lack of resources

“Lack of Resources.

Not having enough people, equipment, documentation, time, parts, etc., to complete a task. Improve supply and support

- Order parts before they are required.

- Have a plan for pooling or loaning parts.”

When aircraft mechanics make repairs, it’s critical that they have the right training, access to the right parts, and correct tooling. They need to be prepared to complete the maintenance task correctly.

Software engineers work with access to almost unlimited information, and with this comes a tendency to take on tasks they have never completed before, under tight timelines. When it comes to upgrades and maintenance actions, it’s important that you’re prepared. Researching and planning the task, and discussing it with others who have done something similar, reduces the risk that you’ll cause an outage by rushing, giving in to pressure to get it done quickly (see 8), or cutting corners to overcome your lack of preparedness (see 12).

8. Pressure

“Pressure Real or perceived forces demanding high-level job performance. Reduce the burden of physical or mental distress

- Communicate concerns.

- Ask for extra help.

- Put safety first.”

Have you ever felt pressured by stakeholders (or even yourself) to release an untested fix, or complete a high risk action without assessing other options?

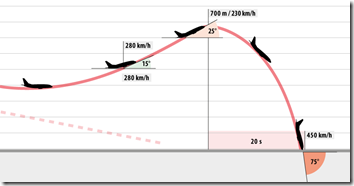

Many small aircraft crashes every year are due to what the industry calls “Get-there-itis”, where pilots make poor decisions attempting to get to their destination, even when conditions are present that would normally lead them to cancel a flight or divert to an alternate airport. A whole section of the FAA pilot syllabus tries to address this by presenting frameworks for Aeronautical Decision Making (ADM). The goal of this training is to teach pilots skills to avoid making poor decisions due to outside or self-inflicted pressure of some kind.

Humans have a tendency to make poor decisions or take actions they know are unsafe when under pressure. In software engineering, the risks associated with these types of actions can often be avoided just by postponing them until you’ve had more time to test, collect data, or take protective measures such as completing backups or peer reviews before taking an action.

9. Lack of assertiveness

“Failure to speak up or document concerns about instructions, orders, or the actions of others. Express your feelings, opinions, beliefs, and needs in a positive, productive manner

- Express concerns but offer positive solutions.

- Resolve one issue before addressing another”

When pilots are in “controlled airspace” being directed by air traffic control (ATC), they are still “in command”. The responsibility for the safe operation of their aircraft, and for any passengers or crew, rests with them. If ATC directs them to take an action that is dangerous, or outside of their own personal minimums, they can decline the direction.

The same applies to engineers responsible for operating systems in production – their goal is to protect customers, and maintain their systems, even if they are given guidance that goes against this.

Ensuring everyone on a team has a safe space to raise concerns is critical to avoiding mistakes (or making them repeatedly). A lot has been written about the impact that “rockstar” engineers who speak over, shout down, or undermine the ideas of those around them have on team morale. One of the more toxic parts of this behavior is its chilling effect on open discussion amongst a team.

Not everyone is extroverted and loud in fighting for their viewpoints, and those who aren’t will readily keep their mouths shut if a team has a culture of dismissing their opinions. Leaders need to ensure this is not the case, to avoid the unneeded customer impact from operational events that could have been avoided if everyone had shared their opinions.

10. Stress

“Stress A physical, chemical, or emotional factor that causes physical or mental tension. Manage stress before it affects your work

- Take a rational approach to problem solving.

- Take a short break when needed.

- Discuss the problem with someone who can help.”

People don’t make great decisions when under stress. Whether you’re flying a plane, building software, or oncall, being in the right state of mind leads to better decisions. While many software teams operate under tight timelines with a drive to deliver, it’s important to foster a sense of calm during times of critical importance.

In my experience teams who are the most successful at reducing stress during launch activities or operational events are those who are proactive about accounting for it. Consider ways to give your team time and space to address issues, such as accounting for time to address unknowns in your project timelines, or addressing root causes of oncall issues so operators’ jobs get easier over time. Even the way team members communicate during these crucial times can have a big impact on team stress – make an effort to speak in calm, matter of fact tones, and avoid alarmist rhetoric.

11. Lack of awareness

“Lack of Awareness Failure to recognize a situation, understand what it is, and predict the possible results. See the whole picture

- Make sure there are no conflicts with an existing repair or modifications.

- Fully understand the procedures needed to complete a task.”

Pilots and aircrew develop checklists, processes and diagnostic tools to assess their readiness and that of their aircraft.

Similarly, ensuring that teams have the right tooling, access to good metrics and log diving abilities, monitors and alarms, and centralized dashboards is critical to giving engineers visibility into their systems. If your team does not yet have the capability to review graphs and metrics that show how their systems are behaving, both in real time and historically, this limits their ability to address issues that occur.

12. Norms

“Norms Expected, yet unwritten, rules of behavior. Help maintain a positive environment with your good attitude and work habits

- Existing norms don’t make procedures right.

- Follow good safety procedures.

- Identify and eliminate negative norms”

Safety in aviation comes with a lot of “Process” with a capital P. In recent years I've seen a trend in software to move away from creating strong team processes, in exchange for freedom for team members to take their own approach. This creates an environment where it is seen as OK to go against well-established best practice.

Some of the largest outages are caused by teams developing norms that go against known best practice. Consider the following statements and their worst case scenarios:

“It’s ok for developers to have ‘delete’ or ‘drop’ permissions to production databases so they can delete test data. Everyone does it”

“I know we model our load balancers in CloudFormation/Terraform, but I just need to make this one quick manual port configuration change. I’ll update our model later”

“Encrypted customer data backups are slow to work with in development. We’ll enable encryption after we launch.”

Establishing (and adhering to) norms that ensure your team doesn’t learn bad habits is an easy way to minimize the risk of human error.
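The “one quick manual change” scenario above is a case where a norm can be backed by a mechanical check. As a rough sketch of the idea only, the snippet below compares a declared configuration with what is actually running and reports any drift. The `find_drift` function and the setting names are hypothetical; it does not call CloudFormation or Terraform, and real infrastructure tools provide their own ways to detect drift.

```python
def find_drift(declared, actual):
    """Return {key: (declared_value, actual_value)} for every mismatch.

    A value of None means the key is absent on that side (so this sketch
    cannot distinguish an absent key from one explicitly set to None).
    """
    drift = {}
    for key in sorted(declared.keys() | actual.keys()):
        want = declared.get(key)
        have = actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Illustrative settings only: what the model says vs. what is running.
declared = {"listener_port": 443, "idle_timeout": 60}
actual = {"listener_port": 8443, "idle_timeout": 60, "debug_port": 9000}

for key, (want, have) in find_drift(declared, actual).items():
    print(f"{key}: declared={want!r} actual={have!r}")
```

Running a comparison like this on a schedule makes “I’ll update our model later” visible to the whole team instead of relying on one person's memory.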